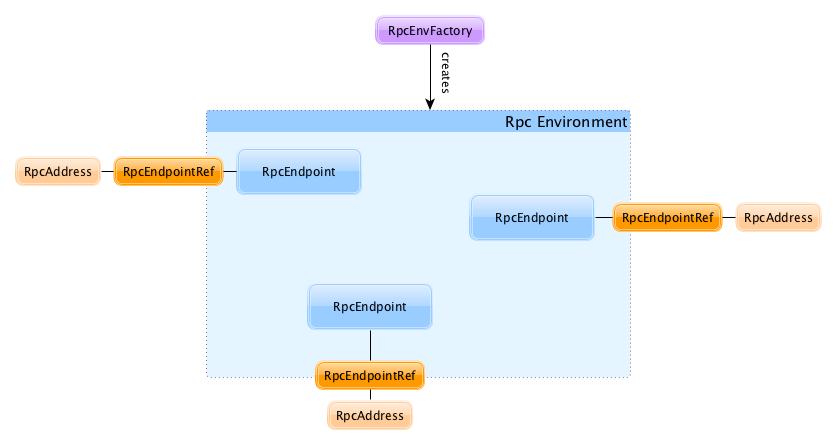

RpcEnv

RPC Environment (aka RpcEnv) is an environment in which RpcEndpoints process messages. An RPC Environment manages the entire lifecycle of RpcEndpoints:

- registers (sets up) endpoints (by name or URI)

- routes incoming messages to them

- stops them
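The three responsibilities above can be sketched with a toy registry. This is a minimal illustration only: `ToyRpcEnv`, `ToyEndpoint`, `setupEndpoint`, `deliver` and `stop` are hypothetical names chosen for this sketch, not Spark's actual classes or signatures, and everything runs in-process on the calling thread.

```scala
import scala.collection.mutable

// Hypothetical toy types; Spark's real RpcEnv and RpcEndpoint are richer.
trait ToyEndpoint {
  def onStart(): Unit = ()
  def receive(message: Any): Unit
  def onStop(): Unit = ()
}

final class ToyRpcEnv {
  private val endpoints = mutable.Map.empty[String, ToyEndpoint]

  // Register an endpoint under a unique name and start it.
  def setupEndpoint(name: String, endpoint: ToyEndpoint): Unit = {
    require(!endpoints.contains(name), s"endpoint $name already registered")
    endpoints(name) = endpoint
    endpoint.onStart()
  }

  // Route a message to the named endpoint; returns false if there is none.
  def deliver(name: String, message: Any): Boolean =
    endpoints.get(name) match {
      case Some(endpoint) => endpoint.receive(message); true
      case None           => false
    }

  // Unregister the endpoint and notify it.
  def stop(name: String): Unit =
    endpoints.remove(name).foreach(_.onStop())
}

object ToyRpcEnvDemo {
  // Runs one endpoint through register, route, stop and returns what it saw.
  def run(): List[String] = {
    val log = mutable.ListBuffer.empty[String]
    val env = new ToyRpcEnv
    env.setupEndpoint("echo", new ToyEndpoint {
      override def onStart(): Unit = { log += "start"; () }
      def receive(message: Any): Unit = { log += s"got $message"; () }
      override def onStop(): Unit = { log += "stop"; () }
    })
    env.deliver("echo", "hello")
    env.stop("echo")
    env.deliver("echo", "late") // not delivered: the endpoint is gone
    log.toList
  }
}
```

Calling `ToyRpcEnvDemo.run()` yields the events in lifecycle order, and the message sent after `stop` is not delivered.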

RpcEndpoint

RpcEndpoints define how to handle messages (what functions to execute given a message). RpcEndpoints register (with a name or URI) with an RpcEnv to receive messages from RpcEndpointRefs.

An RpcEndpoint can be registered to one and only one RpcEnv.

The lifecycle of an RpcEndpoint is onStart, receive, and onStop, in that order.

receive can be called concurrently.

Tip: If you want receive to be thread-safe, use ThreadSafeRpcEndpoint.
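To illustrate what a thread-safe receive guarantees, the sketch below serializes calls with a lock so that unsynchronized endpoint state is never touched by two threads at once. It is an assumption-laden illustration of the guarantee only: `SketchEndpoint`, `SerializedEndpoint` and `CountingEndpoint` are hypothetical names, and this is not a description of how ThreadSafeRpcEndpoint is implemented.

```scala
// Hypothetical endpoint trait for illustration only.
trait SketchEndpoint {
  def receive(message: Any): Unit
}

// Wraps an endpoint so that at most one thread runs receive at a time.
final class SerializedEndpoint(underlying: SketchEndpoint) extends SketchEndpoint {
  private val lock = new Object
  def receive(message: Any): Unit = lock.synchronized(underlying.receive(message))
}

final class CountingEndpoint extends SketchEndpoint {
  var count = 0 // deliberately unsynchronized mutable state
  def receive(message: Any): Unit = message match {
    case "inc" => count += 1
    case _     => ()
  }
}

object SerializedEndpointDemo {
  // Sends "inc" from several threads through the serialized wrapper.
  def run(threads: Int, perThread: Int): Int = {
    val counter = new CountingEndpoint
    val safe = new SerializedEndpoint(counter)
    val workers = (1 to threads).map { _ =>
      new Thread(() => (1 to perThread).foreach(_ => safe.receive("inc")))
    }
    workers.foreach(_.start())
    workers.foreach(_.join())
    counter.count
  }
}
```

Because every call goes through the same lock, no increments are lost. Calling `counter.receive` directly from the worker threads would give no such guarantee.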

RpcEndpointRef

An RpcEndpointRef is a reference to an RpcEndpoint in an RpcEnv.

It is a serializable entity, so you can send it over a network or save it for later use (it can, however, be deserialized only by the owning RpcEnv).

An RpcEndpointRef has an address (a Spark URL) and a name.

You can send asynchronous one-way messages to the corresponding RpcEndpoint using the send method.

You can send a semi-synchronous message, i.e. "subscribe" to be notified when a response arrives, using the ask method. You can also block the calling thread until a response arrives using the askWithRetry method.
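The three calling styles (one-way, future-based, blocking) can be sketched with plain Scala futures. `SketchRef` and its `askSync` method are hypothetical stand-ins written for this note. They do not reproduce the signatures of Spark's RpcEndpointRef, and the "endpoint" here is just a local partial function.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._

// Hypothetical stand-in for an endpoint reference; not Spark's RpcEndpointRef.
final class SketchRef(handler: PartialFunction[Any, Any]) {
  // One-way: hand the message off and return immediately, ignoring any result.
  def send(message: Any): Unit = {
    Future(handler.lift(message))
    ()
  }

  // Request/response: returns a Future that completes when the reply is ready.
  def ask(message: Any): Future[Any] = Future {
    handler.applyOrElse(
      message,
      (m: Any) => throw new IllegalArgumentException(s"unhandled: $m"))
  }

  // Blocking variant: waits on the calling thread, up to the given timeout.
  def askSync(message: Any, timeout: FiniteDuration): Any =
    Await.result(ask(message), timeout)
}

object SketchRefDemo {
  val ref = new SketchRef({ case "ping" => "pong" })

  // Blocks the caller until the reply to "ping" arrives.
  def blockingReply(): Any = ref.askSync("ping", 5.seconds)
}
```

A caller that does not want to block would attach a callback to the `Future` returned by `ask` instead of calling `askSync`.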

RpcAddress

RpcAddress is the logical address for an RPC Environment, with hostname and port.

RpcAddress is encoded as a Spark URL, i.e. spark://host:port.

RpcEndpointAddress

RpcEndpointAddress is the logical address for an endpoint registered to an RPC Environment, with RpcAddress and name.

It is in the format of spark://[name]@[rpcAddress.host]:[rpcAddress.port].
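As a rough illustration of the two address formats, the sketch below models them with case classes and parses an endpoint URL with `java.net.URI`. The names `SketchRpcAddress`, `SketchEndpointAddress` and `SparkUrls.parseEndpoint` are hypothetical and only mirror the concepts described above.

```scala
import java.net.URI

// Hypothetical case classes mirroring the concepts above, not Spark's own classes.
final case class SketchRpcAddress(host: String, port: Int) {
  def toSparkUrl: String = s"spark://$host:$port"
}

final case class SketchEndpointAddress(name: String, address: SketchRpcAddress) {
  override def toString: String = s"spark://$name@${address.host}:${address.port}"
}

object SparkUrls {
  // Parses spark://name@host:port and rejects anything that does not fit that shape.
  def parseEndpoint(sparkUrl: String): SketchEndpointAddress = {
    val uri = new URI(sparkUrl)
    val (host, port, name) = (uri.getHost, uri.getPort, uri.getUserInfo)
    require(
      uri.getScheme == "spark" && host != null && port >= 0 && name != null,
      s"Invalid Spark URL: $sparkUrl")
    SketchEndpointAddress(name, SketchRpcAddress(host, port))
  }
}
```

The endpoint name travels in the user-info part of the URL, so `SparkUrls.parseEndpoint("spark://driver@localhost:7077")` recovers the name `driver` and the address `localhost:7077`.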

Ask Operation Timeout

An ask operation is when an RPC client expects a response to a message. It is a blocking operation.

You can control the time to wait for a response using the following settings (in that order):

- spark.rpc.askTimeout

- spark.network.timeout

Each value can be a bare number (interpreted as seconds) or a number with a time suffix, e.g. 50s, 100ms, or 250us. See Settings.
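The lookup order and the value format can be sketched as follows. This is a simplified model, not Spark's configuration code: `AskTimeoutSketch`, `parseTime` and `askTimeout` are hypothetical names, the settings live in a plain `Map`, only the us, ms and s suffixes are handled, and the fallback used when neither setting is present is supplied by the caller as an assumption of the sketch.

```scala
import scala.concurrent.duration._

object AskTimeoutSketch {
  // Settings consulted in order; the first one present wins.
  val keys: Seq[String] = Seq("spark.rpc.askTimeout", "spark.network.timeout")

  // Parses "50s", "100ms", "250us", or a bare number (taken as seconds).
  // Only these three suffixes are handled in this sketch.
  def parseTime(value: String): FiniteDuration = {
    val TimePattern = """(\d+)(us|ms|s)?""".r
    value.trim.toLowerCase match {
      case TimePattern(n, "us") => n.toLong.micros
      case TimePattern(n, "ms") => n.toLong.millis
      case TimePattern(n, _)    => n.toLong.seconds // "s" or no suffix
      case other =>
        throw new IllegalArgumentException(s"Invalid time string: $other")
    }
  }

  // `fallback` is a caller-supplied default, an assumption of this sketch.
  def askTimeout(conf: Map[String, String], fallback: FiniteDuration): FiniteDuration =
    keys
      .collectFirst { case k if conf.contains(k) => parseTime(conf(k)) }
      .getOrElse(fallback)
}
```

With both settings present, the value of spark.rpc.askTimeout is used. spark.network.timeout only applies when the first key is absent.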